start

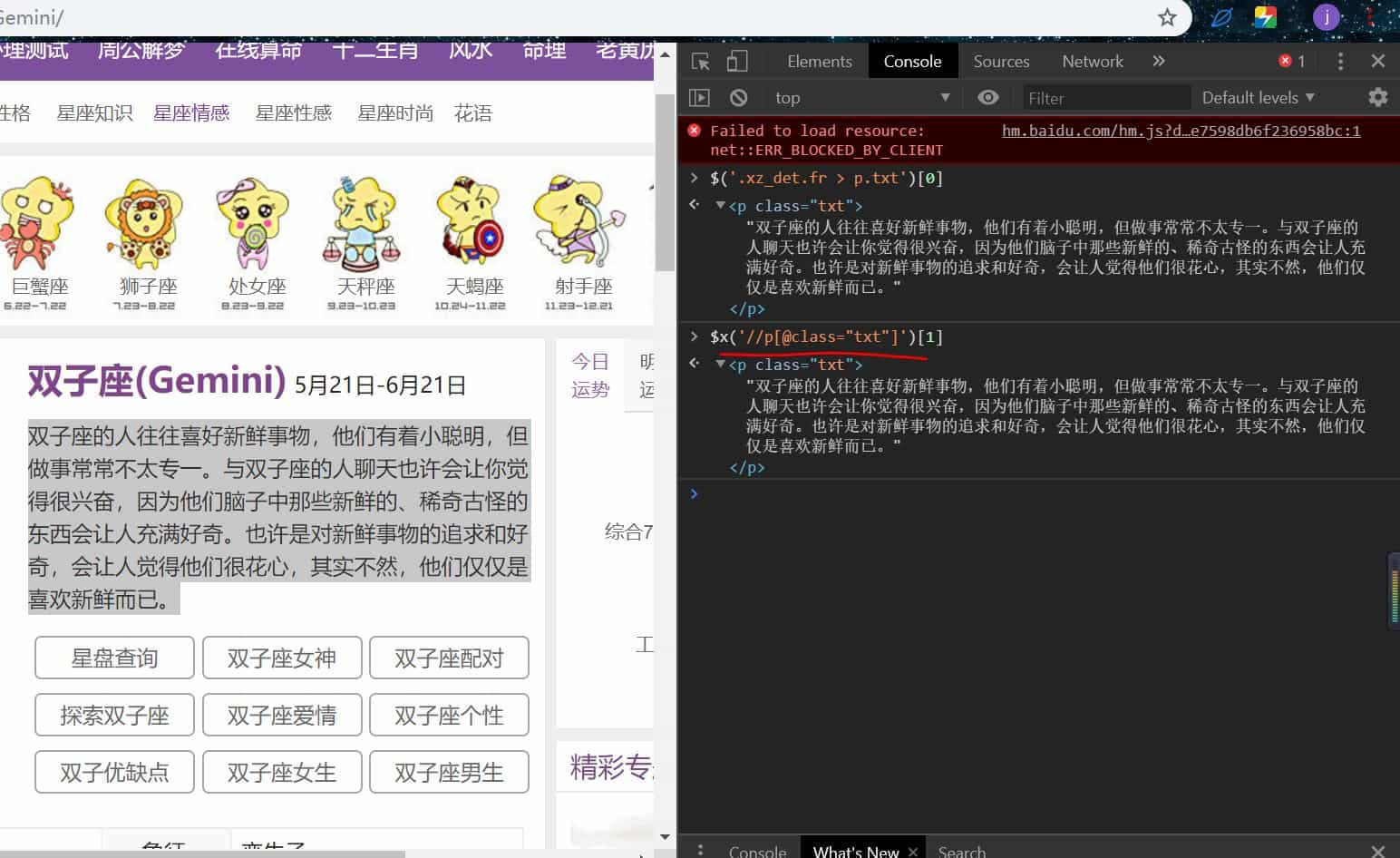

逻辑和前面一样,只不过是改成 xpath 表达式

我们先测试好,xpath 表达式,如图:

html = etree.HTML(response.text)

luck = html.xpath('//p[@class="txt"]/text()')[0]

选取所有(//)标签为 p 的,属性(@)为 class 且值为 txt 的标签,获得其中的文本数据(/text())

在代码中有一个

time.sleep(1)

这一句的意思是,没爬完一页就休息一下,因为,爬虫访问很快,不像人的正常速度,太快,对方察觉了会封 ip,所以我们放慢一点

end

完整代码

import requests

import csv

import time

from lxml import etree

from fake_useragent import UserAgent

def get_html(url):

'''

请求 html

:param url:

:return: 成功返回 html,否则返回 None

'''

count = 0 # 用来计数

while True:

headers = {

'User-agent' : UserAgent().random

}

response = requests.get(url,headers=headers)

if response.status_code == 200:

response.encoding = 'utf-8'

return response

else:

count += 1

if count == 3: # 超过 3 次请求失败则跳过

return

else:

continue

def get_infos(response):

'''

提取信息

:param response:

:return:

'''

html = etree.HTML(response.text)

luck = html.xpath('//p[@class="txt"]/text()')[0]

return luck

def write_txt(_,info):

'''

写入 txt 文件

:param _: 星座名

:param info: 星座运势

:return:

'''

with open('luck.txt','a+',encoding='utf-8') as f:

info = info.strip()

f.write(_ + '\n')

f.write(info + '\n\n')

if __name__ == '__main__':

# 所有 12 星座的名称, 并构造 urls

constellation_name = ['Aries', 'Taurus', 'Gemini', 'Cancer', 'Leo',

'Virgo', 'Libra', 'Scorpio', 'Sagittarius',

'Capricorn', 'Aquarius', 'Pisces']

for _ in constellation_name:

url = 'https://www.d1xz.net/astro/{}/'.format(_)

response = get_html(url)

if response == None:

continue

info = get_infos(response)

write_txt(_,info)

time.sleep(1)